In my previous post on text manipulation I discussed the process of OCR and text munging to create a list of chapter contents. In this post I will investigate what can be done with a dataframe; future posts will discuss using a corpus and a document-term matrix.

Each chapter is an XML file, so I read those into a variable and inspect them.
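A minimal sketch of that first step, assuming the chapter files sit together in one directory. The directory name `chapters` and the variable `chapter.files` are hypothetical, not taken from the original listing:

```r
# Hypothetical directory holding one XML file per chapter; adjust to your layout.
chapter.dir <- "chapters"

# list.files() returns an empty character vector if nothing matches.
chapter.files <- list.files(chapter.dir, pattern = "\\.xml$", full.names = TRUE)

length(chapter.files)   # how many chapter files were found
head(chapter.files)     # inspect the first few paths
```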

Next I create a dataframe that serves as the worklist I will process and also holds data about each chapter. It contains one row per chapter and tallies information such as:

- bname: base name of the chapter XML file

- chpt: chapter number

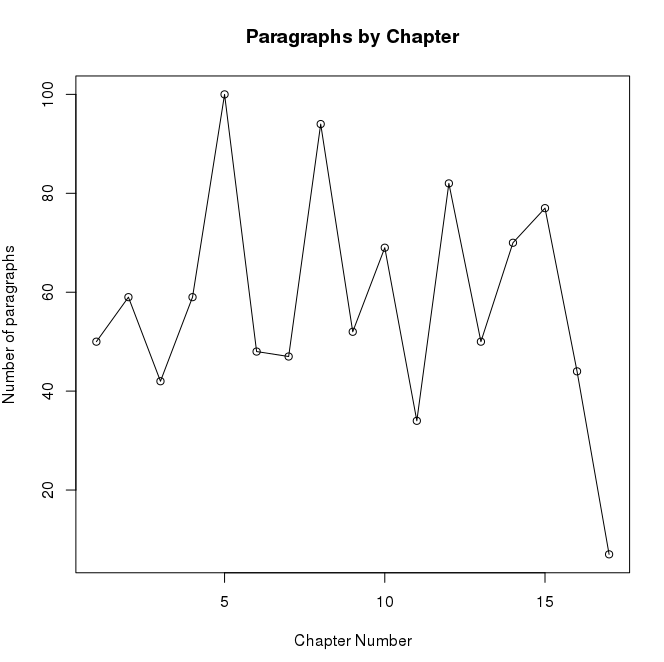

- paragraphs: number of paragraphs

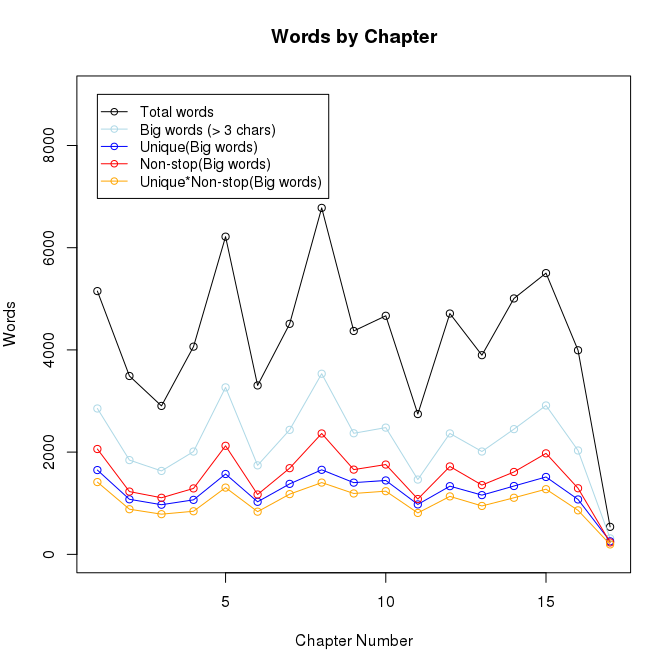

- total: total number of words

- nosmall: number of words left after removing small (<4 characters) words

- uniques: number of unique words

- nonstop: number of non-stop words

- unnstop: number of unique non-stop words

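One way such a worklist could be set up is sketched below. The file names are made up for illustration, and taking the chapter number from the digits in the file name is my assumption about the naming scheme:

```r
# Illustrative file names; in practice these would come from list.files().
chapter.files <- c("chapters/chapter1.xml", "chapters/chapter2.xml",
                   "chapters/chapter10.xml")

d <- data.frame(bname = basename(chapter.files), stringsAsFactors = FALSE)

# Assumption: the chapter number is the run of digits in the base name.
d$chpt <- as.integer(gsub("[^0-9]", "", d$bname))

# Placeholder columns, to be filled in as each chapter is processed.
for (col in c("paragraphs", "total", "nosmall", "uniques", "nonstop", "unnstop")) {
  d[[col]] <- NA_integer_
}

# Sort numerically so chapter 10 follows chapter 9, not chapter 1.
d <- d[order(d$chpt), ]
```

Starting the tallies as `NA` rather than 0 makes it obvious if a chapter was skipped during processing.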

I will read the chapter XML files into a list and at the same time count the number of paragraphs per chapter:

```r
chpts <- vector(mode="list", length=nrow(d))
```
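The rest of the loop could look roughly like the sketch below. It assumes each paragraph is a `<p>...</p>` element, which may not match the real chapter files, and it uses a regular expression from base R rather than a proper XML parser to stay self-contained. The two tiny files written to a temporary directory are stand-ins so the example runs on its own:

```r
# Write two tiny stand-in chapter files so the sketch runs on its own.
tmp <- tempfile("chapters")
dir.create(tmp)
writeLines("<chapter><p>One.</p><p>Two.</p></chapter>", file.path(tmp, "chapter1.xml"))
writeLines("<chapter><p>Only one.</p></chapter>", file.path(tmp, "chapter2.xml"))

d <- data.frame(bname = c("chapter1.xml", "chapter2.xml"),
                paragraphs = NA_integer_, stringsAsFactors = FALSE)

chpts <- vector(mode = "list", length = nrow(d))
for (i in seq_len(nrow(d))) {
  # Read the whole file as a single string.
  txt <- paste(readLines(file.path(tmp, d$bname[i]), warn = FALSE), collapse = " ")
  # Assumption: each paragraph is a <p>...</p> element.
  m <- regmatches(txt, gregexpr("<p[ >].*?</p>", txt, perl = TRUE))[[1]]
  # Strip the tags, keeping only the paragraph text.
  chpts[[i]] <- gsub("<[^>]+>", "", m)
  # Record the paragraph count in the worklist.
  d$paragraphs[i] <- length(chpts[[i]])
}
```

For real files with nested markup or attributes, a dedicated parser such as the xml2 package would likely be more robust than a regular expression.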

Each quote from a character is given its own paragraph, so a high paragraph count is indicative of lots of conversation.

Next I create a list for each parameter I would like to extract. Stop words are provided by the tidytext package:

```r
library(tidytext)
```
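The tallies could be computed along these lines. This is a sketch under my own assumptions: `tally.words` is a hypothetical helper, words are split on anything that is not a letter or apostrophe, `uniques` is counted over all words, and stop words are removed after the small words. A tiny made-up stop list keeps the example free of extra packages; with tidytext installed, `tidytext::stop_words$word` could be passed instead.

```r
tally.words <- function(paragraphs, stops) {
  # Lower-case, then split on anything that is not a letter or apostrophe.
  words <- unlist(strsplit(tolower(paste(paragraphs, collapse = " ")), "[^a-z']+"))
  words <- words[nzchar(words)]
  nosmall <- words[nchar(words) >= 4]           # drop words under 4 characters
  non.stops <- nosmall[!(nosmall %in% stops)]   # then drop stop words
  list(total   = length(words),
       nosmall = length(nosmall),
       uniques = length(unique(words)),
       nonstop = length(non.stops),
       unnstop = length(unique(non.stops)),
       words   = non.stops)
}

# Illustrative stop list only; not the tidytext lexicon.
stops <- c("several", "through", "about", "there")
res <- tally.words(c("Several girls walked through the rock.",
                     "The rock was there."), stops)
res$words
```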

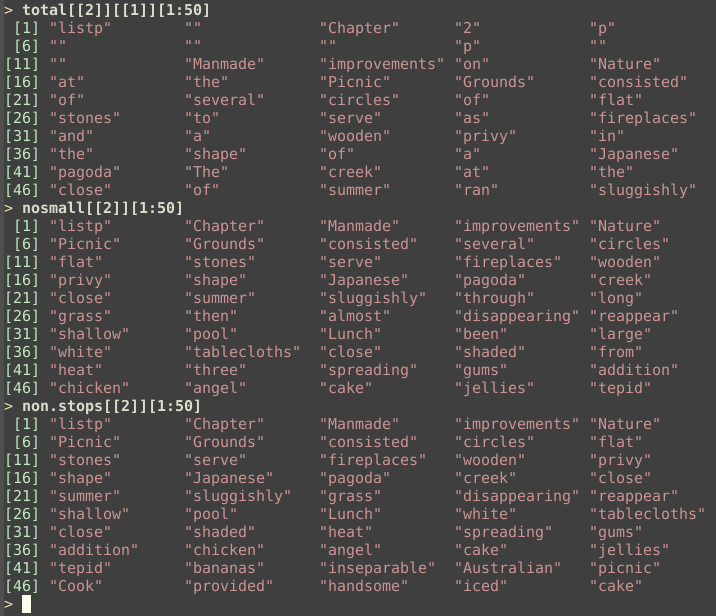

The word count trends are the same for all categories, which is expected. I am interested in the “Non-stop(Big words)” category, the red line, because I don’t want to normalize word dosage: if the word “happy” is present 20 times, I want the 20x dosage of the happiness sentiment, which I wouldn’t get using unique words. To visually inspect the word lists, I will simply pull out the first 50 words from each category for chapter 2.
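To make the dosage point concrete, here is a small sketch with a made-up word vector standing in for one chapter's non-stop words:

```r
# Illustrative stand-in for one chapter's non-stop words.
words <- c("happy", "rock", "happy", "picnic", "happy", "rock")

# First 50 words (fewer if the vector is shorter).
first.words <- head(words, 50)

# Keeping duplicates preserves the dosage: "happy" counts three times.
dosage <- table(words)
dosage[["happy"]]

# Collapsing to unique words would count every word exactly once.
length(unique(words))
```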

Comparing nosmall to non.stops, the first two words eliminated are words 9 and 24, “several” and “through”, two words that I agree don’t contribute to sentiment or content.

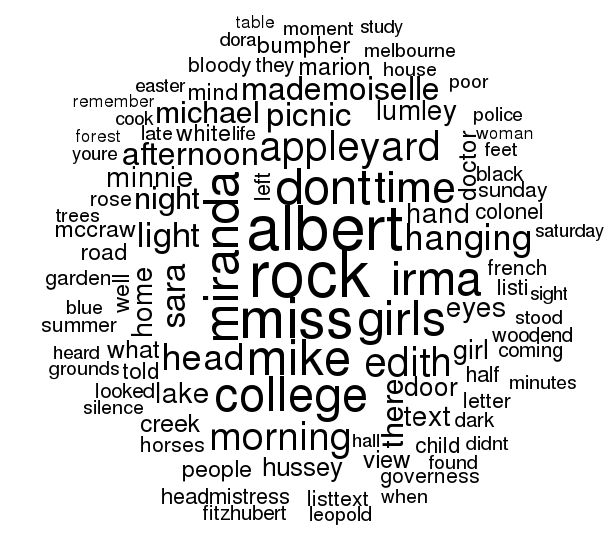

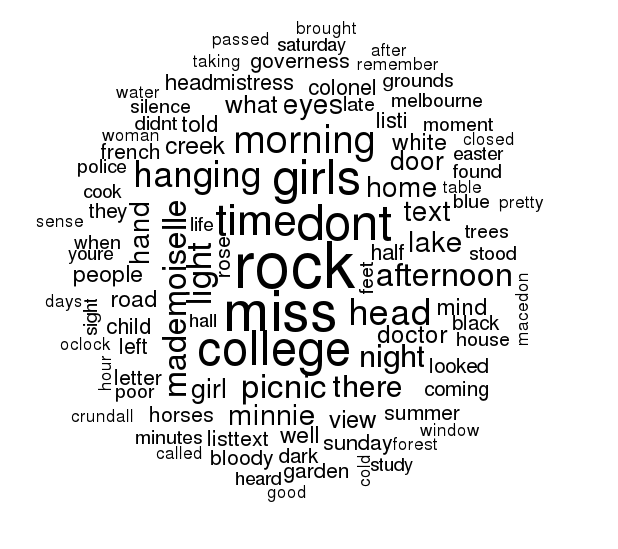

Next I will make a wordcloud of the entire book. To do so I must get the words into a dataframe.

```r
word <- non.stops[[1]]
```
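A sketch of how the whole book could be gathered into a frequency dataframe and plotted. The sample `non.stops` list is made up, flattening with `unlist()` is my shortcut rather than the original approach, and the plot is drawn only if the wordcloud package happens to be installed:

```r
# Stand-in for the per-chapter lists of non-stop words.
non.stops <- list(c("rock", "picnic", "rock"), c("rock", "Miranda", "picnic"))

# Flatten all chapters into one vector and count each word.
word <- unlist(non.stops)
counts <- table(word)
freq <- data.frame(word = names(counts), n = as.integer(counts),
                   stringsAsFactors = FALSE)
freq <- freq[order(-freq$n), ]   # most frequent first

# Draw the cloud only if the wordcloud package is available.
if (requireNamespace("wordcloud", quietly = TRUE)) {
  wordcloud::wordcloud(words = freq$word, freq = freq$n,
                       min.freq = 1, max.words = 100)
}
```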

Appropriately, “rock” is the most frequent word. The word cloud contains many proper nouns. I will make a vector of these nouns, remove them from the collection of words, and re-plot:

```r
prop.nouns <- c("Albert","Miranda","Mike","Michael","Edith","Irma","Sara","Dora","Appleyard","Hussey","Fitzhubert","Bumpher","Leopold","McCraw","Marion","Woodend","Lumley")
```
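The removal itself can be done with `%in%`. In this sketch the noun list is shortened and the `word` vector is made up. Comparing in lower case is my addition, so the filter still works if the words were lower-cased earlier in the pipeline:

```r
prop.nouns <- c("Albert", "Miranda", "Appleyard")   # shortened for illustration
word <- c("rock", "Miranda", "picnic", "miranda", "Albert", "rock")

# Compare in lower case so the match does not depend on capitalization.
keep <- !(tolower(word) %in% tolower(prop.nouns))
word.clean <- word[keep]
word.clean
```

The cleaned vector can then be tallied and plotted the same way as before.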

In the next post I will look at what can be done with a corpus.